Token Terminal x Google

Enabling app-level analytics on Solana public data

Over the past year, Token Terminal worked closely with Google to improve the scalability and usability of Solana’s public data on BigQuery. Today, we’re announcing the first release of major upgrades to the Solana Community dataset hosted by Google Cloud Web3.

This update includes:

Query only the days you need: users can analyze recent Solana activity by scanning only the specific days they care about.

Simplified app-level analytics: high-volume Solana instruction data is now organized so that application-specific queries scan far less irrelevant history.

Public datasets for “Internet Capital Markets”: decoded public datasets on BigQuery for top Solana venues, namely pump.fun, Jito, Raydium, Orca, Marinade, and Jupiter.

“When launched in 2023, the goal was accessibility. Two years and 140 million blocks later, we’ve evolved the Solana BigQuery dataset into precision public tooling for Internet Capital Markets. Token Terminal’s decoders turn raw onchain chaos into structured, instruction-level insights—giving every builder the deep microstructure transparency once reserved for sophisticated teams. I look forward to seeing how the Solana community uses these powerful new primitives.”

Together, these changes make public Solana analytics materially cheaper and easier to run for common workloads, especially single-day and application-focused analysis.

Google provides the BigQuery public dataset infrastructure and global serving layer, while Token Terminal contributed key optimizations and decoded data layers that make application-level analysis practical on open infrastructure. The result is a public Solana dataset that is significantly more efficient to query at ecosystem scale, and that remains a public, community-supported resource provided on an as-is basis.

For Token Terminal, Solana is the first chain in a broader effort to make high-quality, publicly queryable L1/L2 data more accessible on Google Cloud. We plan to extend this work to additional networks over time so developers, researchers, and institutions can build on transparent onchain data with lower cost barriers and richer application-level coverage.

The problem

When Solana was added to Google Cloud’s BigQuery public dataset program, it was a huge first step for open access to high-throughput blockchain data. Solana’s pace and scale are unique, with new data landing roughly every 200ms, so making it publicly queryable required careful design from the start.

The initial rollout pushed infrastructure and schema thinking to evolve through collaboration between the Solana Foundation, Google Cloud, BCW Group, and Token Terminal. It made archival Solana data broadly available to the community and created a base for accessible research and rich insights.

As usage grew and Solana’s activity continued to scale, two areas became clear opportunities to improve the dataset for day-to-day analytics at ecosystem scale:

Partitioning granularity needed to match real-world usage

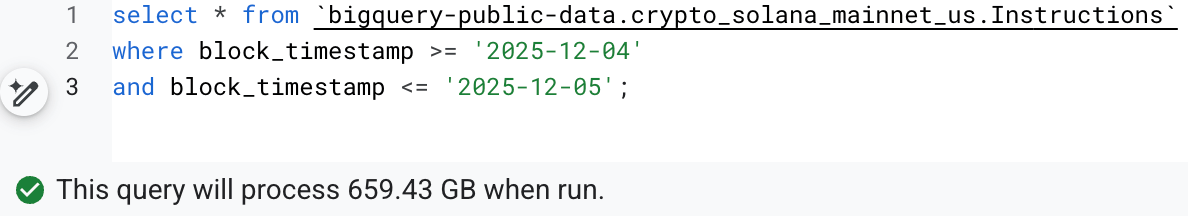

Core tables were originally partitioned monthly, which was a sensible starting point for a new public dataset. But many common workflows operate at daily cadence. As Solana continued to run at roughly a 200ms block pace, we saw an opportunity to collectively push the limits of public data infrastructure by moving to finer-grained partitions.

In the worst cases, running routine one-day analyses could cost over $100 per query, even when users were only trying to look at the most recent activity.

Before daily partitions, a single-day query could scan an entire month, costing $100+ per run in the worst case.

App-level analytics needed a simpler and cheaper path

The public dataset made Solana instruction history accessible, but isolating activity for a single application still meant scanning large sections of the Instructions table. Because protocol-specific queries required scanning broad instruction history without proper clustering, improving how those queries were targeted became important for affordability.

In the worst cases, routine app-level analysis could also cost over $100 per query, which made these workflows hard to run regularly on public infrastructure.

Before clustering, even app-level single-day queries could scan a full month of data, making them unnecessarily expensive.

Collaborating in the open

Working together with Google, we rolled out upgrades to Solana’s public BigQuery dataset that make open Solana analytics far more cost-efficient and practical at application level. The changes are already live for anyone querying Solana on BigQuery.

Query only the days you need

The dataset originally used monthly partitions, which meant users often had to scan (and pay for) an entire month even when they only needed the last day or two. We migrated core tables to daily partitions, so end users can query recent Solana activity by scanning just the specific days they care about, instead of full-month partitions.

Impact: in our benchmarks, day-level and incremental workflows are now over 10× cheaper because they only touch the relevant daily partitions. In practice, that means a one-day query now comes in at roughly $4 per run.

With daily partitions live, a single-day query now costs only about $4 per run.
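As a rough illustration of what "scanning just the specific days" looks like in a query, the sketch below builds a single-day SQL string with a half-open time window on the partitioning column. The table path `bigquery-public-data.crypto_solana_mainnet_us.Instructions` and the column name `block_timestamp` are assumptions for illustration; check the dataset schema before relying on them.

```python
from datetime import date, datetime, timedelta, timezone

# Assumed names, not confirmed by this post: verify against the dataset schema.
TABLE = "bigquery-public-data.crypto_solana_mainnet_us.Instructions"
PARTITION_COLUMN = "block_timestamp"


def day_bounds(day: date) -> tuple[datetime, datetime]:
    """Return the half-open UTC window [start, end) covering one calendar day."""
    start = datetime(day.year, day.month, day.day, tzinfo=timezone.utc)
    return start, start + timedelta(days=1)


def single_day_query(day: date) -> str:
    """Build a query whose constant time filter covers exactly one UTC day."""
    start, end = day_bounds(day)
    return (
        "SELECT COUNT(*) AS instruction_count\n"
        f"FROM `{TABLE}`\n"
        f"WHERE {PARTITION_COLUMN} >= TIMESTAMP('{start:%Y-%m-%d %H:%M:%S}+00')\n"
        f"  AND {PARTITION_COLUMN} < TIMESTAMP('{end:%Y-%m-%d %H:%M:%S}+00')"
    )


if __name__ == "__main__":
    print(single_day_query(date(2025, 1, 31)))
```

The filter compares the partitioning column against constants, which is what lets BigQuery skip partitions outside the window. A dry run is a cheap way to confirm how many bytes a given query would scan before paying for it.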

App-level analytics now simplified

To make application-specific analysis affordable, we introduced clustering on the highest-volume tables, especially clustering the Instructions table by executing_account. This allows users to isolate activity for a single Solana app without scanning broader instruction history.
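To benefit from clustering, an app-focused query would filter on `executing_account` in addition to the day window. The sketch below builds such a query with named parameters. The table path and the `block_timestamp` column are assumptions, and `<PROGRAM_ID>` is a placeholder rather than a real address.

```python
from datetime import date, timedelta

# Assumed table path; only `executing_account` is named in this post.
TABLE = "bigquery-public-data.crypto_solana_mainnet_us.Instructions"


def app_day_query(program_id: str, day: date) -> tuple[str, dict[str, str]]:
    """Return (sql, params) for one app's instructions on one UTC day."""
    sql = (
        "SELECT COUNT(*) AS instruction_count\n"
        f"FROM `{TABLE}`\n"
        "WHERE block_timestamp >= TIMESTAMP(@day_start)\n"  # partition filter (assumed column)
        "  AND block_timestamp < TIMESTAMP(@day_end)\n"
        "  AND executing_account = @program_id"  # clustering filter
    )
    params = {
        "day_start": f"{day.isoformat()} 00:00:00+00",
        "day_end": f"{(day + timedelta(days=1)).isoformat()} 00:00:00+00",
        "program_id": program_id,
    }
    return sql, params


if __name__ == "__main__":
    sql, params = app_day_query("<PROGRAM_ID>", date(2025, 1, 31))
    print(sql)
    print(params)
```

The returned parameters would be passed to whichever BigQuery client you use as STRING query parameters. An equality filter on the clustered column is the simplest form for block pruning; it is worth checking with a dry run that the bytes scanned drop as expected.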

Impact: with clustering on key columns, app-focused queries have already become over 1000× cheaper. In our benchmarks, an app-focused instruction query now costs about $0.023 per run.

After clustering, the same app-focused one-day query scans about 3.75 GB, bringing cost down to roughly $0.023 per run.
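The cost figures above can be sanity-checked with simple arithmetic. The sketch below assumes on-demand pricing of $6.25 per TiB scanned (an assumption, not stated in this post; check current BigQuery pricing for your region) and treats "GB" as binary gigabytes (GiB).

```python
# Assumption: on-demand list price per TiB scanned; not stated in the post.
USD_PER_TIB = 6.25
BYTES_PER_GIB = 2**30
BYTES_PER_TIB = 2**40


def query_cost_usd(bytes_scanned: float, usd_per_tib: float = USD_PER_TIB) -> float:
    """Estimated on-demand cost for a query that scans `bytes_scanned` bytes."""
    return bytes_scanned / BYTES_PER_TIB * usd_per_tib


def implied_tib_scanned(cost_usd: float, usd_per_tib: float = USD_PER_TIB) -> float:
    """Invert the pricing formula: TiB scanned implied by a given cost."""
    return cost_usd / usd_per_tib


if __name__ == "__main__":
    clustered = query_cost_usd(3.75 * BYTES_PER_GIB)
    print(f"3.75 GiB scanned   -> ${clustered:.3f}")
    print(f"$4 per run implies   {implied_tib_scanned(4.0):.2f} TiB scanned")
    print(f"$100 per run implies {implied_tib_scanned(100.0):.0f} TiB scanned")
```

Under these assumptions, 3.75 GiB works out to about $0.023, which matches the benchmark figure. The same formula implies that a $4 query scans roughly 0.64 TiB and a $100 query roughly 16 TiB.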

Decoded public datasets in BigQuery

Alongside the cost upgrades, we’ve deployed a custom, version-aware Solana decoder that can decode instructions against any historical IDL version. This lets us produce consistent decoded datasets across a program’s full history.

On Solana, this matters because IDLs change frequently. For fast-moving apps it’s common to see dozens of historical IDL versions over time, and a decoder that only understands the latest version can miss or misinterpret older instructions. For example, Jupiter alone has accumulated 40+ historical IDL versions to date.
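The post does not describe how the decoder selects an IDL version, so the following is only one plausible sketch of the idea: keep the versions sorted by the first slot at which each applies, then binary-search for the version in force at a given instruction's slot. The `IdlVersion` type, its fields, and the slot numbers are all hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class IdlVersion:
    first_slot: int  # hypothetical: first slot at which this IDL version applies
    label: str       # hypothetical: human-readable version name


def idl_for_slot(versions: list[IdlVersion], slot: int) -> IdlVersion:
    """Pick the version active at `slot`; `versions` must be sorted by first_slot."""
    starts = [v.first_slot for v in versions]
    index = bisect_right(starts, slot) - 1  # last version starting at or before slot
    if index < 0:
        raise ValueError(f"no IDL version covers slot {slot}")
    return versions[index]


if __name__ == "__main__":
    history = [IdlVersion(100, "v1"), IdlVersion(200, "v2"), IdlVersion(350, "v3")]
    for slot in (150, 200, 999):
        print(slot, idl_for_slot(history, slot).label)
```

A decoder that only knows the latest version behaves as if every slot mapped to the final entry in this list, which is where older instructions get missed or misread.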

Using this framework, decoded datasets are now live on the Google Cloud Marketplace for pump.fun, Jito, Raydium, Orca, Marinade and Jupiter.

Impact: Each dataset exposes the most common, analytics-critical instructions for that application. This lets teams analyze real function calls directly in BigQuery, like Raydium and Orca swaps, Jito claims, or Marinade deposits, without building their own decoding pipeline.

Try it yourself

To make these upgrades as useful as possible in practice, we’re sharing two ways to work with decoded Solana data on BigQuery. Both approaches are live today and can be tried end-to-end in Colab.

Option 1, DIY decoding in BigQuery (developer-focused)

For Solana developers who prefer to DIY, we’ve built a set of example functions and reference libraries that show how to extract complex, app-level events directly in BigQuery. This path is ideal if you want full flexibility, or if you’re working with a program we haven’t published as a pre-decoded dataset yet.

We’re excited to see what builders create with warehouse-native decoding, from custom analytics and ML feature generation to MEV research and real-time strategy backtests. Beyond the provided examples, you can implement your own decoding logic directly inside BigQuery using user-defined functions (UDFs).

User-defined functions let you:

keep your workflow entirely in SQL and BigQuery

decode instructions at scale without running a separate Python pipeline

iterate quickly on custom schemas and program-specific logic

extend decoding to new programs as needed

In other words, UDF-based decoding gives developers a data warehouse-native way to go from raw instructions to structured tables, without having to maintain an external decoding service.
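To make the decoding step concrete, here is a minimal sketch of the kind of logic a decoding function implements, written in Python for readability rather than as an actual BigQuery UDF. It assumes an Anchor-based program, where instruction data starts with an 8-byte discriminator (the first 8 bytes of SHA-256 over `global:<instruction_name>`), and a hypothetical instruction whose only argument is a little-endian u64. Not every Solana program follows this layout, and the instruction name `deposit` is purely illustrative.

```python
import hashlib
import struct
from typing import Optional


def anchor_discriminator(instruction_name: str) -> bytes:
    """First 8 bytes of sha256("global:<name>"), as used by Anchor programs."""
    return hashlib.sha256(f"global:{instruction_name}".encode()).digest()[:8]


def decode_u64_arg(data: bytes, instruction_name: str) -> Optional[int]:
    """Return the u64 argument if `data` matches the instruction, else None.

    Assumes the layout: 8-byte discriminator followed by one little-endian u64.
    """
    if len(data) < 16 or data[:8] != anchor_discriminator(instruction_name):
        return None
    (value,) = struct.unpack_from("<Q", data, 8)
    return value


if __name__ == "__main__":
    # Build a synthetic instruction payload for a hypothetical "deposit" call.
    payload = anchor_discriminator("deposit") + struct.pack("<Q", 1_000_000)
    print(decode_u64_arg(payload, "deposit"))   # matches: prints the amount
    print(decode_u64_arg(payload, "withdraw"))  # wrong discriminator: prints None
```

A UDF version would apply the same two steps per row: match the discriminator, then unpack the arguments according to the IDL version in force for that instruction.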

→ DIY Colab walkthrough: solana_decoding_demo_developers.ipynb

Option 2, ready-to-query decoded datasets (analyst-focused)

If your goal is to analyze applications rather than build decoding infrastructure, you can use Token Terminal’s public pre-decoded datasets live on the Google Cloud Marketplace.

This approach lets you:

jump straight into app-level analytics

query common instructions (swaps, deposits, claims, etc.) immediately, without maintaining IDLs, schemas, or decoding logic

rely on the optimized public tables underneath for lower cost and better performance

It’s the fastest path for analysts, researchers, and teams who want decoded outputs without needing to build or own the decoding stack.

We’re also planning to make a subset of these decoded tables available directly in the public dataset early next year, so even more of the ecosystem can experiment with decoded Solana data out of the box.

→ Decoded datasets Colab walkthrough: solana_decoding_demo_analysts.ipynb

Looking ahead

Solana’s public dataset was a major first step for open analytics on BigQuery. With these upgrades live, the ecosystem now has a foundation that is affordable to use for real app-level research at scale.

This is the first example of what public access to leading blockchains can look like when performance and usability are treated as first-class design goals. We’re excited to keep building on this momentum with Google, expanding decoded coverage and bringing the same public-first approach to more ecosystems over time.

If you’re building on Solana, start with our Colab examples and let us know what you want to see next.

And if you’re a Solana project interested in making your onchain data publicly available through the Google Cloud Marketplace, reach out to us at [email protected].